RAG

RAG (Retrieval-Augmented Generation)

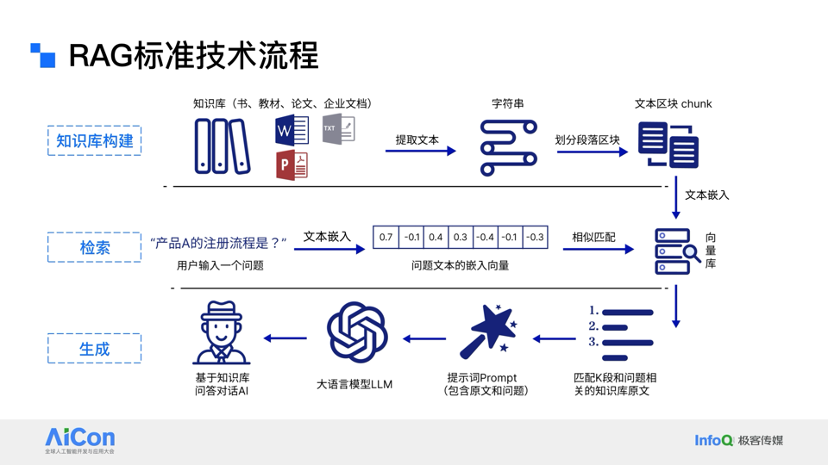

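The core RAG workflow can be simplified into the following three steps:

- Indexing (knowledge base construction): parse, clean, and vectorize external documents to build a high-quality index.

- Retrieval: given the user's query, search the vector space for the most relevant content.

- Generation: the large language model produces the final answer based on the retrieved results.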
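The three steps can be illustrated with a dependency-free toy pipeline. This is a minimal sketch under stated assumptions, not how LlamaIndex works internally: a bag-of-words counter stands in for a real embedding model, fixed-size word chunks stand in for document parsing and cleaning, and the generation step only builds the prompt an LLM would receive. All function names (`build_index`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a real pipeline would use the embedding model's tokenizer
    return re.findall(r"\w+", text.lower())


def embed(text: str) -> Counter:
    # Assumption: a bag-of-words count vector stands in for a dense embedding
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Indexing: split each document into fixed-size chunks and store (chunk, vector) pairs
def build_index(docs: list[str], chunk_size: int = 20) -> list[tuple[str, Counter]]:
    index = []
    for doc in docs:
        words = doc.split()
        for i in range(0, len(words), chunk_size):
            chunk = " ".join(words[i:i + chunk_size])
            index.append((chunk, embed(chunk)))
    return index


# Retrieval: rank chunks by cosine similarity to the query vector, keep the top k
def retrieve(index: list[tuple[str, Counter]], query: str, top_k: int = 2) -> list[str]:
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Generation: assemble the prompt for the LLM (the model call itself is omitted)
def build_prompt(query: str, contexts: list[str]) -> str:
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "To upgrade CUDA, uninstall the old toolkit and install the new version from NVIDIA.",
        "Python virtual environments isolate package dependencies per project.",
    ]
    idx = build_index(docs)
    hits = retrieve(idx, "how to upgrade cuda", top_k=1)
    print(build_prompt("how to upgrade cuda", hits))
```

In a real system the retrieved chunks are inserted into the prompt the same way; only the vectors (dense embeddings) and the similarity search (a vector store) are more sophisticated.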

Building an Offline RAG for a Personal Knowledge Base with LlamaIndex

LlamaIndex is an open-source data framework for building LLM applications.

https://github.com/run-llama/llama_index

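```python
import logging
import os
import sys

from llama_index.core import SimpleDirectoryReader, VectorStoreIndex, Settings
from llama_index.core import StorageContext, load_index_from_storage
from llama_index.embeddings.huggingface import HuggingFaceEmbedding
from llama_index.llms.llama_cpp import LlamaCPP

# Print LlamaIndex debug logs to stdout. basicConfig already attaches a stdout
# handler, so no extra StreamHandler is added (it would print every line twice).
logging.basicConfig(stream=sys.stdout, level=logging.DEBUG)

# Local LLM loaded from a GGUF file through llama.cpp.
# System prompt: "You are a personal knowledge base assistant; answer the
# user's questions based on their input!"
llm = LlamaCPP(
    model_path=r".\Llama-3.2-3B-Instruct-F16.gguf",
    system_prompt="你是一个个人知识库查询助手,请根据用户的输入,回答相应的问题!",
)

# Chinese text-embedding model from Hugging Face, used for indexing and querying
embed_model = HuggingFaceEmbedding(model_name="aspire/acge_text_embedding")
Settings.embed_model = embed_model

index_dir = "./index"
if os.path.exists(index_dir):
    # Reuse the persisted index so documents are not re-embedded on every run
    storage_context = StorageContext.from_defaults(persist_dir=index_dir)
    index = load_index_from_storage(storage_context)
else:
    # First run: read every file under ./data, including subdirectories
    documents = []
    reader = SimpleDirectoryReader(input_dir="./data", recursive=True)
    for docs in reader.iter_data():  # yields the documents of one file at a time
        documents.extend(docs)
    index = VectorStoreIndex.from_documents(documents, embed_model=embed_model)
    index.storage_context.persist(persist_dir=index_dir)

query_engine = index.as_query_engine(llm=llm)

# Pass only the user's question: the query string is also what gets embedded
# for retrieval, so a serialized message list would add noise.
# Question: "How do I upgrade the CUDA version?"
response = query_engine.query("如何升级cuda版本?")
print(response)
```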
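The script builds the index only when `./index` does not exist and otherwise loads it from disk, which is what keeps later runs fast. The same load-or-build pattern is sketched below in plain Python, assuming a hypothetical JSON file (`toy_index.json`) as the cache and a per-file list of lines as a stand-in for the expensive parse-and-embed step; LlamaIndex's actual `persist_dir` contents are different.

```python
import json
import os
import tempfile

# Hypothetical cache file name; LlamaIndex's persist_dir holds its own files.
INDEX_FILE = "toy_index.json"


def build_index_from_dir(data_dir: str) -> dict[str, list[str]]:
    """Stand-in for the expensive step (parsing + embedding): map each file to its lines."""
    index: dict[str, list[str]] = {}
    # os.walk descends into subdirectories, mirroring recursive=True
    for root, _dirs, files in os.walk(data_dir):
        for name in sorted(files):
            path = os.path.join(root, name)
            with open(path, encoding="utf-8") as f:
                index[os.path.relpath(path, data_dir)] = f.read().splitlines()
    return index


def load_or_build(data_dir: str, index_dir: str) -> tuple[dict[str, list[str]], bool]:
    """Return (index, built): load the persisted index if present, else build and persist it."""
    path = os.path.join(index_dir, INDEX_FILE)
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            return json.load(f), False
    index = build_index_from_dir(data_dir)
    os.makedirs(index_dir, exist_ok=True)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(index, f, ensure_ascii=False)
    return index, True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = os.path.join(tmp, "data")
        os.makedirs(data_dir)
        with open(os.path.join(data_dir, "notes.txt"), "w", encoding="utf-8") as f:
            f.write("upgrade cuda\n")
        _, built_first = load_or_build(data_dir, os.path.join(tmp, "index"))
        _, built_second = load_or_build(data_dir, os.path.join(tmp, "index"))
        print(built_first, built_second)  # True on the first run, False on the second
```

One consequence of this design, shared by the original script: files added to `./data` after the first run are not indexed until the cache (here the JSON file, in the script the `./index` directory) is deleted. Checking for the cache file rather than only the directory also avoids trying to load from an empty directory left behind by an interrupted run.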